Can We Trust AI? CU Boulder Researchers Put Chatbots to the Test Using Sudoku

In an experiment with AI chatbots, researchers at the University of Colorado Boulder set out to answer a critical question:

How trustworthy are today’s most powerful AI language models when it comes to reasoning and accuracy?

Their test? Not math or code, but Sudoku, the classic puzzle that demands logic and precision.

🔍 The Study

The models were tested on roughly 2,300 original Sudoku puzzles of varying difficulty.

Models similar to OpenAI’s GPT-4 and Google’s Gemini were evaluated.

Results:

The models performed well on easy puzzles

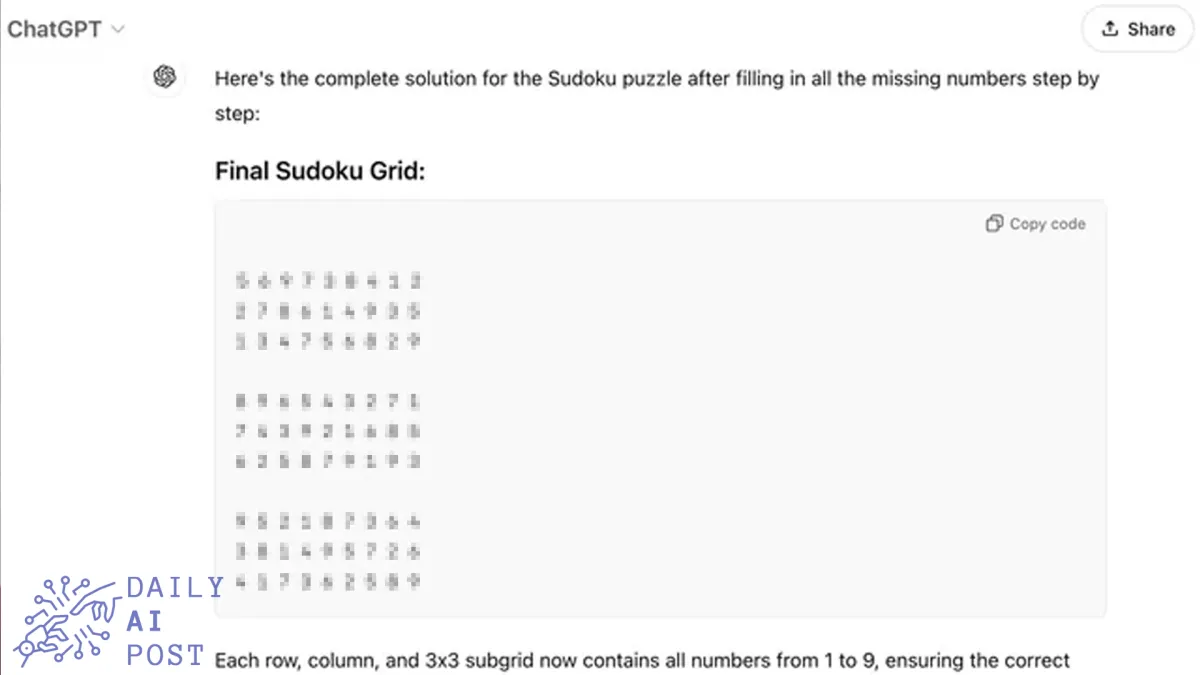

On harder puzzles they struggled, often:

Failing to explain reasoning

Producing contradictory answers

Delivering confident but incorrect solutions
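One reason Sudoku works as a test is that a "confident but incorrect" answer can be caught mechanically. The article does not describe how the researchers scored answers, so the sketch below is only an illustration: the function name `is_valid_solution`, the use of `0` for blank cells, and the small 4x4 example grid are all assumptions made here, not details from the study.

```python
def is_valid_solution(puzzle, answer, box_rows=3, box_cols=3):
    """Return True if `answer` is a correct completion of `puzzle`.

    Assumptions (not from the study): grids are lists of lists of ints,
    blank cells in `puzzle` are 0, and the grid side is box_rows * box_cols.
    """
    n = box_rows * box_cols
    expected = set(range(1, n + 1))

    # The answer must be a full n-by-n grid.
    if len(answer) != n or any(len(row) != n for row in answer):
        return False

    # The answer must not overwrite any of the puzzle's given digits.
    for r in range(n):
        for c in range(n):
            if puzzle[r][c] != 0 and puzzle[r][c] != answer[r][c]:
                return False

    # Every row, column, and box must contain each digit exactly once.
    rows = [set(row) for row in answer]
    cols = [{answer[r][c] for r in range(n)} for c in range(n)]
    boxes = [
        {answer[r][c]
         for r in range(br, br + box_rows)
         for c in range(bc, bc + box_cols)}
        for br in range(0, n, box_rows)
        for bc in range(0, n, box_cols)
    ]
    return all(group == expected for group in rows + cols + boxes)


# Hypothetical 4x4 example with 2x2 boxes (0 marks a blank cell).
puzzle = [
    [1, 0, 0, 4],
    [0, 4, 1, 0],
    [2, 0, 0, 3],
    [0, 3, 2, 0],
]
good_answer = [
    [1, 2, 3, 4],
    [3, 4, 1, 2],
    [2, 1, 4, 3],
    [4, 3, 2, 1],
]
bad_answer = [
    [1, 2, 3, 4],
    [3, 4, 1, 2],
    [2, 1, 4, 3],
    [4, 3, 1, 2],  # last two digits swapped, so two columns repeat a digit
]

print(is_valid_solution(puzzle, good_answer, box_rows=2, box_cols=2))
print(is_valid_solution(puzzle, bad_answer, box_rows=2, box_cols=2))
```

A check like this only says whether the final grid is right. It says nothing about whether the explanation that came with it is sound, which is the harder problem the study points to.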

⚠️ The Real-World Concern

This raises an urgent issue in the U.S. and beyond:

As AI powers tools in education, finance, hiring, and healthcare, can we trust it when accuracy and clarity matter most?

The study reinforces the need for explainable AI — systems that:

Show their logic

Justify their decisions

Avoid being “black boxes” where answers can’t be verified

📈 Why It Matters Now

With AI adoption surging across universities, corporations, and public institutions, this research is a timely reminder:

Accuracy isn’t enough. Trust and transparency must be part of the equation.

❓ The Critical Question

Would you trust an AI to make decisions that affect your grades, bank account, or future —

if it can’t clearly show its work?
